By Oliver Allchin & Cat Bazela

Literature reviews take ages. Having to find and evaluate different sources, explore and compare key information, theories and ideas, and work it all into some sort of synthesis - it’s all such a palaver.

Don’t worry though, AI can do all that tedious reading and thinking for you, summarising the entirety of human knowledge in seconds, leaving you free to do something presumably more interesting, like hoovering.

Or at least, that’s the premise the latest raft of AI-assisted search tools seems to be built on. But can they really replicate the depth, thoroughness, insight and reliability of a human literature reviewer? Perhaps more importantly, do we want them to?

What is RAG?

Retrieval-Augmented Generation (RAG) systems combine generative AI models with information retrieval. The goal is to make GenAI responses more accurate and reliable by supplementing the model’s training data with information taken from verifiable sources.

When you enter a prompt or question, the AI turns it into a search, finds relevant sources (from the web, or from a database or corpus of published literature) and summarises the top results with citations. Some tools first show you a research plan, which you can edit and refine to guide the process. In theory, these tools let you use an AI large language model (LLM) as a ‘research assistant’ that searches for and summarises information, and gives you a short list of sources for further reading.
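The retrieve-then-summarise loop can be sketched in a few lines of Python. This is a toy illustration under our own assumptions, not how Perplexity, Gemini or Ai2 actually work: the three-document corpus, the word-overlap scoring in `retrieve` and the `generate` placeholder (which stands in for the language model and simply stitches passages together with numbered citations) are all hypothetical.

```python
import re

# Hypothetical mini-corpus standing in for "the web, or a database or corpus
# of published literature". Real tools search millions of documents.
CORPUS = {
    "doc1": "Universities run induction weeks that introduce students to the virtual learning environment.",
    "doc2": "Digital skills workshops teach students to use library databases and reference managers.",
    "doc3": "Blue tits are common garden birds in the UK.",
}


def tokenize(text: str) -> set[str]:
    """Lower-case the text and return the set of words it contains."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Step 1 (retrieval): rank documents by how many query words they share.

    Word overlap is a crude stand-in for a real search engine.
    Ties are broken by document ID so the ranking is deterministic.
    """
    query_words = tokenize(query)
    scored = []
    for doc_id, text in corpus.items():
        overlap = len(query_words & tokenize(text))
        if overlap > 0:  # skip documents with no words in common
            scored.append((overlap, doc_id, text))
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def generate(query: str, passages: list[tuple[str, str]]) -> str:
    """Step 2 (generation): placeholder for the LLM.

    A real system would send the query plus these passages to a language
    model; here we just echo the question and stitch the passages together
    with numbered citations and a source list.
    """
    body = [f"{text} [{n}]" for n, (_, text) in enumerate(passages, start=1)]
    sources = [f"[{n}] {doc_id}" for n, (doc_id, _) in enumerate(passages, start=1)]
    return "\n".join([f"Question: {query}"] + body + ["", "Sources:"] + sources)


question = "How do universities induct students into digital tools?"
print(generate(question, retrieve(question, CORPUS)))
```

Running it prints the two induction-related passages with [1] and [2] markers and a source list; the bird document is never retrieved. Even in this toy version, the citations can only point to whatever the retrieval step happened to find. In real tools a language model then rewrites those passages, which is where misattributed or unsupported claims can creep in.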

However, it’s important to be critical of the information these tools provide, and to evaluate and verify any responses they give. In this article, we look at some freely available RAG tools and carry out a light-touch assessment of the quality of their responses to our prompts.

Perplexity

Perplexity has a tool called "Deep Research", which is designed to "save you hours of time by conducting in-depth research and analysis on your behalf". It refines its search with each iteration and then provides you with a report.

We used the prompt “How do universities in the UK typically induct their new and existing students into the digital tools, websites, apps and equipment that they will use during their studies? How do they ensure entry-level digital skills are taught, as well as an understanding of the ethical approaches to using technology?”

The tool returned a structured report and explained how it had identified the 46 sources used to create it. All of the sources were listed and, when we checked, all were real. On a first read-through, the report seemed well written and appeared to draw on the sources the tool had listed.

However, only 6 of the 46 sources were cited in the report itself. Of these 6 in-text citations, three were government web pages, two were web pages from higher education institutions, and one was a list-style blog post. It then became apparent that information had been drawn from more of the sources without their being cited in the text. The tool was also prone to attributing information to the wrong source, or to amalgamating sources to describe something that doesn’t exist (hallucination). As a result, the cited references did not accurately reflect the information presented in the report.

Gemini Deep Research

Deep Research is a new feature in Google Gemini, marketed as a 'research assistant' that will save you 'hours of work'.

Essentially, it Googles stuff for you and writes you a report of what it’s found. This report can be converted into an 'audio overview' so you can listen to it.

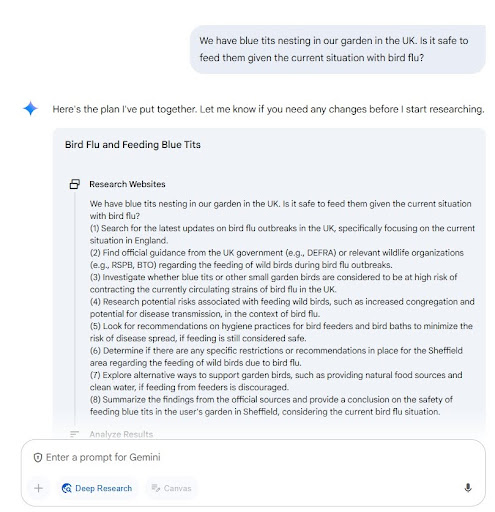

We used the prompt "I have blue tits nesting in our garden in the UK. Is it safe to feed them given the current situation with bird flu?"

It generated a research plan: a set of questions it aims to answer. This is potentially helpful for users who are trying to think of research questions to use as the basis for a literature review.

We found the response generated by Deep Research was mostly useful and accurately reflected current UK guidance on feeding garden birds. However, the sources consulted were a mixed bag. Whilst it did consult appropriate sources such as Defra and the RSPB, there were several citations to the websites of bird food companies, which you might argue have a vested interest in whether or not people feed birds. Although it correctly identified the city and country we made the request from, Deep Research made frequent references to US legislation and agencies that weren’t relevant in this context.

In one example from the report, guidance from Defra (a UK government department) is referenced. However, the first source cited for it is the blog of a company that sells bird feeders, and the second is the Pennsylvania Game Commission website, which makes no mention of Defra guidelines.

Whilst the 2,800-word report was detailed and mostly helpful, it didn’t necessarily provide more information than we found by simply going to the RSPB website.

Ai2 Scholar QA

Ai2 Scholar QA is a free tool designed to "satisfy literature searches that require insights from multiple relevant documents, and synthesise those insights into a comprehensive report".

It searches a corpus of around 8 million open-access academic papers (indexed using the Vespa search engine), mostly in science, engineering, environment and medicine. When you enter a question or prompt, it generates summaries with citations to key evidence from the literature, including tables comparing key themes from the papers it finds. It’s free to use and doesn’t require you to sign up for an account.

We used the prompt “How can we encourage people to follow hygiene guidance during pandemics?”

This generated a seemingly coherent, well-structured report with citations to (mostly) real and relevant journal papers. The summary was a little generic and lacking in detail: full of broad-brush statements rather than specific data or evidence. One nice feature is the literature comparison table, which summarises evidence from a handful of studies. However, there’s no indication of why the papers in the table were selected over the others cited in the summary.

Citations give no page numbers, but hovering over a citation brings up a snippet of the original text being summarised. Often this snippet bears little relation to the point being made. For example, a point about using serving utensils and avoiding shaking hands cited a text that didn’t appear to mention either.

We noticed instances where the citation refers to a section of a paper that is itself paraphrasing yet another paper (secondary citation).

We also noticed some citations to ‘LLM Memory’. Hovering over one of these citations displays the following text: "Generated by Anthropic Claude…we could not find any reference with evidence that supports this statement". Clearly, the model is prone to hallucination, but at least it flags these instances.